Visual Pattern Recognition in Algorithmic Trading: Can RL Agents “See”?

July 14th, 2025

After over a decade developing systematic trading frameworks based on institutional order flow mechanics, I’ve arrived at a fascinating problem that sits at the intersection of human cognition and machine learning:

How do you teach an algorithm, and specifically an RL agent, to see what you see?

This isn’t a philosophical question; it’s an engineering challenge with real financial implications.

The Pattern Recognition Paradox

Human traders excel at visual pattern recognition. We can glance at a chart and instantly identify support levels, trend exhaustion, or momentum shifts. We process complex visual information across multiple resolutions simultaneously—the equivalent of looking at a forest, individual trees, and leaf structures all at once.

But when it comes time to automate these insights through reinforcement learning agents, we hit a wall. The patterns we recognize so effortlessly resist straightforward translation into the numerical data streams that algorithms consume.

Consider a simple example: recognizing when a price rejection “matters.” A human trader sees a long wick at a significant level and understands the context—was this the third test of support? Did it happen at a confluence of multiple technical levels? Was the rejection violent or tentative?

An RL agent fed raw OHLCV data sees none of this. It sees numbers: open=5000, high=5025, low=4995, close=5010, volume=1000. The visual gestalt that makes the pattern significant is absent.
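
To make the gap concrete, here is a minimal Python sketch that derives basic candle geometry from the bar quoted above. `Bar` and `wick_profile` are hypothetical names introduced for illustration, not part of any library. The geometry is easy to compute; what the output cannot say is whether the level mattered or how many times it had already been tested.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bar:
    """One OHLCV bar, the raw unit an agent typically receives."""
    open: float
    high: float
    low: float
    close: float
    volume: float


def wick_profile(bar: Bar) -> dict:
    """Split a bar's range into upper wick, body, and lower wick."""
    body_top = max(bar.open, bar.close)
    body_bottom = min(bar.open, bar.close)
    total_range = bar.high - bar.low
    body = body_top - body_bottom
    return {
        "upper_wick": bar.high - body_top,
        "body": body,
        "lower_wick": body_bottom - bar.low,
        # Share of the bar's range that is wick; 0.0 for a zero-range bar.
        "wick_fraction": (total_range - body) / total_range if total_range > 0 else 0.0,
    }


bar = Bar(open=5000, high=5025, low=4995, close=5010, volume=1000)
profile = wick_profile(bar)
```

The result describes one bar in isolation. Everything a trader would call context still has to come from somewhere else.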

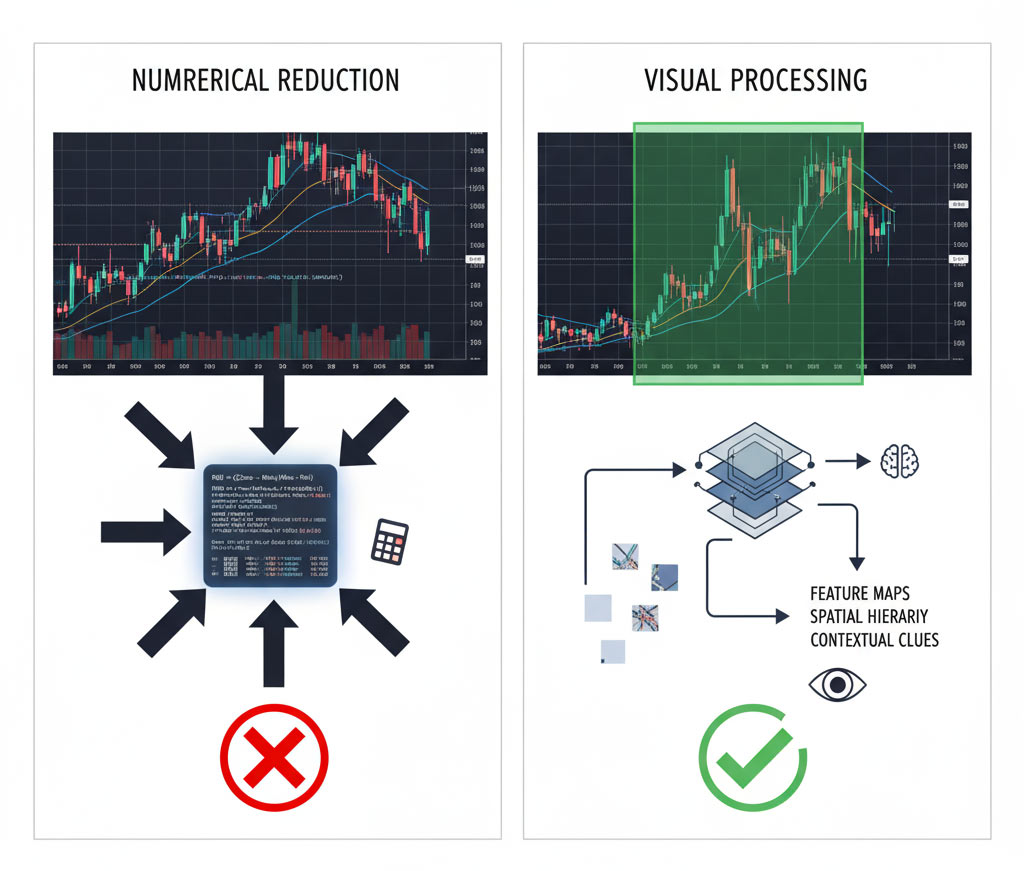

Two Approaches: Numerical Reduction vs Visual Processing

The traditional approach to algorithmic trading takes the path of least resistance: reduce everything to numerical indicators. RSI, moving averages, standard deviations—all computable, all quantifiable, all (theoretically) objective.

This works well enough for strategies based on statistical edges in price behavior. But it fundamentally limits what the algorithm can learn. You’re teaching it to play chess by the rules without ever showing it the board.
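
As a rough illustration of the numerical-reduction path, the sketch below collapses a short close series into three scalars. The function names and the sample prices are assumptions made for this example, and `simple_rsi` uses plain averages of gains and losses rather than Wilder's smoothing, so its values will differ from most charting platforms.

```python
from statistics import mean, pstdev


def sma(values, period):
    """Simple moving average of the last `period` values."""
    return mean(values[-period:])


def rolling_std(values, period):
    """Population standard deviation of the last `period` values."""
    return pstdev(values[-period:])


def simple_rsi(closes, period=14):
    """RSI from plain averages of gains and losses (not Wilder smoothing)."""
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closes")
    window = closes[-(period + 1):]
    changes = [b - a for a, b in zip(window, window[1:])]
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period
    if avg_loss == 0:
        return 100.0  # no losing changes in the window
    return 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)


# Made-up closes, purely for illustration.
closes = [5000, 5004, 5002, 5008, 5010, 5007]
features = {
    "sma_3": sma(closes, 3),
    "std_3": rolling_std(closes, 3),
    "rsi_5": simple_rsi(closes, period=5),
}
```

Each feature is a single number per bar. The shape of the chart that produced it is gone by the time the agent sees it.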

The alternative approach—teaching machines to process visual patterns the way humans do—is significantly more complex. It requires building a pipeline that preserves the spatial and contextual information that makes pattern recognition powerful in the first place.
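
One simple way to keep spatial information is to rasterize a window of bars into a small grid, so that wicks and bodies occupy positions relative to each other. The sketch below is a toy encoding under my own assumptions (the `rasterize` name, the 0/1/2 cell codes, and the fixed grid height are all hypothetical), not a description of any production pipeline.

```python
def rasterize(bars, height=8):
    """Render (open, high, low, close) tuples as a height x len(bars) grid.

    Cell values: 0 = empty, 1 = wick, 2 = body. Row 0 is the window high.
    """
    lo = min(b[2] for b in bars)
    hi = max(b[1] for b in bars)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat window

    def row(price):
        # Map a price to a row index; the window low is clamped to the last row.
        return min(height - 1, int((hi - price) * height / span))

    grid = [[0] * len(bars) for _ in range(height)]
    for col, (o, h, l, c) in enumerate(bars):
        for r in range(row(h), row(l) + 1):
            grid[r][col] = 1  # full high-to-low extent drawn as wick
        for r in range(row(max(o, c)), row(min(o, c)) + 1):
            grid[r][col] = 2  # body overwrites the wick where they overlap
    return grid


# Made-up two-bar window for illustration.
window = [(5000, 5025, 4995, 5010), (5010, 5030, 5005, 5025)]
grid = rasterize(window, height=7)
```

The grid is coarse, and prices that fall on a row boundary get pushed into the lower row. It does preserve what a flat feature vector drops: where each bar sits relative to its neighbors.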

The Visual→ML Pipeline Challenge

My current research explores this second path. The hypothesis: if visual pattern recognition provides humans with an edge in discretionary trading, then teaching machines to process similar visual information should transfer that edge to automated systems.

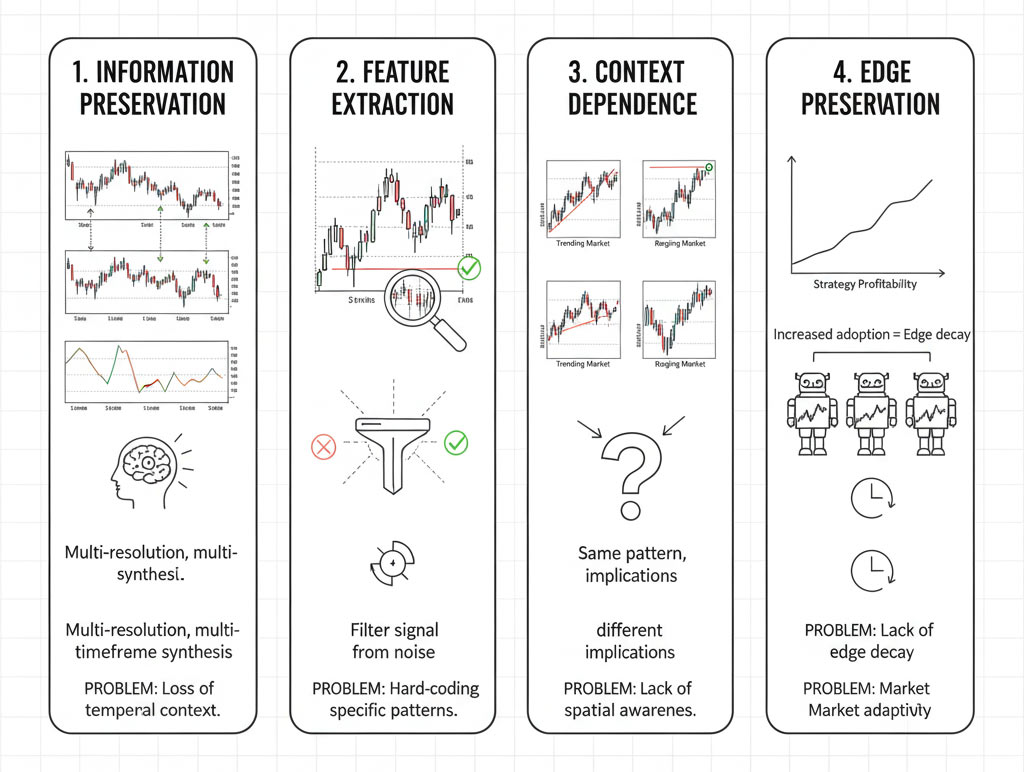

But “teaching machines to see” is more nuanced than it sounds. It’s not simply about feeding screenshots into a convolutional neural network and hoping for the best. The challenge breaks down into several distinct problems:

1. Information Preservation

How do you capture the multi-resolution, multi-timeframe nature of human chart analysis without losing the contextual information that makes patterns meaningful? A trader doesn’t just look at one timeframe—we synthesize information across multiple temporal scales simultaneously.
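
A minimal sketch of the multi-timeframe idea: build coarser bars from finer ones so the same history is available at several resolutions at once. The `aggregate` and `multi_scale_view` helpers and the default factors are assumptions for illustration.

```python
def aggregate(bars, factor):
    """Merge each run of `factor` consecutive OHLCV tuples into one bar.

    Trailing bars that do not fill a complete group are dropped.
    """
    merged = []
    for start in range(0, len(bars) - factor + 1, factor):
        group = bars[start:start + factor]
        merged.append((
            group[0][0],                 # open of the first bar
            max(b[1] for b in group),    # highest high
            min(b[2] for b in group),    # lowest low
            group[-1][3],                # close of the last bar
            sum(b[4] for b in group),    # total volume
        ))
    return merged


def multi_scale_view(bars, factors=(1, 5, 15)):
    """Return the same history at several resolutions, keyed by factor."""
    return {f: aggregate(bars, f) for f in factors}


# Made-up bars: (open, high, low, close, volume).
bars = [
    (100, 102, 99, 101, 10),
    (101, 105, 100, 104, 20),
    (104, 106, 103, 103, 30),
    (103, 104, 98, 99, 40),
    (99, 100, 97, 98, 50),
]
views = multi_scale_view(bars, factors=(1, 2, 5))
```

Aggregation alone does not solve the synthesis problem. It only guarantees that the coarser views are consistent with the finer ones.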

2. Feature Extraction

What aspects of visual patterns are actually significant? Not every wick matters. Not every support test carries equal weight. Human traders intuitively filter signal from noise. How do you codify those filters without hard-coding specific patterns (which would defeat the purpose of using ML in the first place)?

3. Context Dependence

The same visual pattern can mean different things in different contexts. A breakout above resistance in a trending market has different implications than the same breakout in a ranging environment. How do you teach spatial relationship awareness?
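
One crude way to make context explicit is to tag each window with a regime label and pass that label alongside the pattern. The sketch below uses Kaufman's efficiency ratio (net change divided by total path length) as a stand-in regime measure. The 0.5 threshold and the function names are arbitrary choices for this example, not a recommendation.

```python
def efficiency_ratio(closes):
    """Net price change over total path length (near 0 = choppy, 1 = straight)."""
    path = sum(abs(b - a) for a, b in zip(closes, closes[1:]))
    if path == 0:
        return 0.0
    return abs(closes[-1] - closes[0]) / path


def label_regime(closes, threshold=0.5):
    """Tag a window as 'trending' or 'ranging'; the threshold is arbitrary."""
    return "trending" if efficiency_ratio(closes) >= threshold else "ranging"


def contextualize(breakout, closes):
    """Attach the regime label to a breakout flag so the pair travels together."""
    return {"breakout": breakout, "regime": label_regime(closes)}


# Made-up close series for illustration.
trend_case = contextualize(True, [100, 101, 102, 104, 105])
range_case = contextualize(True, [100, 102, 100, 102, 101])
```

Both cases carry the same breakout flag, but a downstream model can now tell them apart. A learned representation would ideally discover this distinction itself rather than rely on a hand-picked threshold.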

4. Edge Preservation

This is perhaps the trickiest problem: if you successfully teach a machine to recognize your edge, and that machine generates returns, how long before the edge degrades as the approach becomes more widely adopted?

Current Research Direction

I’m investigating a hybrid approach that combines the strengths of both paradigms. Rather than choosing between numerical indicators or pure visual processing, the architecture I’m developing uses visual pattern recognition for context and structural understanding, while maintaining numerical precision for execution decisions.
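
The division of labor can be sketched in a few lines, with heavy caveats: this is a toy stand-in, not the architecture itself. `HybridObservation`, `decide`, the `context_score` input (a placeholder for whatever a visual model would output), the 0.6 gate, and the 0.25 tick size are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HybridObservation:
    """Hypothetical container pairing a chart raster with exact numbers."""
    grid: List[List[int]]   # coarse visual encoding of recent bars
    last_close: float       # exact price, kept out of the raster
    level: float            # exact price of a level of interest


def decide(obs: HybridObservation, context_score: float,
           min_score: float = 0.6, tick: float = 0.25) -> Optional[float]:
    """Gate on visual context, then price the order from exact numbers.

    `context_score` stands in for the output of a visual model; here it is
    simply supplied by the caller. Returns a limit price, or None to stand aside.
    """
    if context_score < min_score:
        return None
    # Snap the level to the nearest tick so the price is a valid order price.
    return round(obs.level / tick) * tick


obs = HybridObservation(grid=[[0, 1], [2, 1]], last_close=5010.0, level=5000.1)
order_price = decide(obs, context_score=0.8)
no_trade = decide(obs, context_score=0.3)
```

The point of the split is that the raster never has to carry tick-level precision, and the numeric path never has to infer structure.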

The key insight: humans don’t just see patterns—we see relationships between patterns. We recognize confluence zones where multiple technical factors align. We notice when visual structure contradicts numerical indicators (often a signal in itself). We track the persistence of certain price levels across different timeframes and sessions.

These relationship-based insights are difficult to capture in traditional indicator-based systems, but they’re potentially amenable to ML approaches that can learn hierarchical representations.
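
For contrast, here is what a hand-coded version of one such relationship looks like: grouping nearby levels from different sources into confluence zones. The function name, the tolerance, the minimum source count, and the sample levels are assumptions for illustration. A learned representation would aim to discover groupings like this rather than have them specified.

```python
def confluence_zones(levels, tolerance=2.0, min_sources=2):
    """Group (price, source) pairs whose neighboring prices sit within `tolerance`.

    A group counts as a zone only if it draws on at least `min_sources`
    distinct sources, such as different timeframes or level types.
    """
    clusters, current = [], []
    for price, source in sorted(levels):
        # Start a new cluster when the gap to the previous price is too wide.
        if current and price - current[-1][0] > tolerance:
            clusters.append(current)
            current = []
        current.append((price, source))
    if current:
        clusters.append(current)
    return [
        {
            "low": c[0][0],
            "high": c[-1][0],
            "sources": sorted({s for _, s in c}),
        }
        for c in clusters
        if len({s for _, s in c}) >= min_sources
    ]


# Made-up levels for illustration.
levels = [
    (5000.0, "daily_low"),
    (5001.5, "weekly_pivot"),
    (5030.0, "daily_high"),
    (5000.5, "prior_close"),
]
zones = confluence_zones(levels)
```

The rule works, but only for the relationships someone thought to write down.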

The Meta-Challenge: Human Bias in Training

There’s an additional layer of complexity: when you train an RL agent on patterns you’ve identified through visual analysis, you’re potentially encoding your own cognitive biases into the system. Confirmation bias, recency bias, narrative fallacy—all the psychological pitfalls that affect human traders can transfer to the training process.

This is both a bug and a feature. The goal isn’t to create a perfectly objective trading system (such a thing may not exist), but rather to systematize and scale the repeatable aspects of successful discretionary trading while maintaining risk controls that protect against the failure modes of both human and algorithmic decision-making.

Practical Applications

The immediate application of this research is developing RL agents that can execute systematic trading strategies with human-level pattern recognition but without human-level emotional interference. The vision: algorithms that can identify high-probability setups the way an experienced trader would, but execute them with perfect discipline.

Longer term, this work has implications beyond my personal trading operation. The human→ML knowledge transfer problem exists in any domain where visual pattern recognition provides expertise that’s difficult to codify. Medical imaging, satellite analysis, quality control—anywhere humans develop pattern recognition skills that resist simple algorithmic reduction.

Where This Goes

I’m not revealing specific patterns or methodologies here—that would undermine the competitive advantage this research is meant to create. But I’m documenting the problem space because I believe it’s genuinely interesting from both a technical and philosophical perspective.

Can machines learn to see what humans see? Not exactly—because “seeing” isn’t the right metaphor. But can we build systems that capture the essence of what makes human pattern recognition effective? That’s the real question.

And based on my work so far, the answer appears to be yes—with careful architecture, thoughtful feature engineering, and a willingness to iterate on approaches that don’t fit neatly into traditional ML paradigms.

The market will ultimately judge whether this approach produces alpha. But the intellectual challenge of bridging human visual cognition and algorithmic trading systems has been one of the most engaging problems I’ve worked on.

If you’re interested in following this research as it develops, or if you’re working on similar problems in human-AI collaboration, I’d welcome the conversation. This is genuinely frontier territory, and the best insights often come from unexpected connections between different problem domains.

Tyler Archer develops systematic trading frameworks and reinforcement learning agents for futures markets. His work focuses on institutional order flow mechanics and micro-market structure analysis.

Developing Next-Generation Quantitative Trading Systems

I'm a systematic futures trader building complete quantitative systems and RL agents that exploit institutional order flow through visual pattern recognition, machine learning, and deep reinforcement learning.